Cohelion, an All-in-one Data Warehouse Factory

By Rick F. van der Lans • published July 22, 2020 • last updated February 3, 2022


Generally, many tools are required to develop a full-blown data warehouse environment, including ETL tools, database servers, master data management systems, metadata tools, and data quality tools. As a result, many projects spend a lot of time integrating the tools themselves, in addition to the time spent integrating the data from different sources.

And even when everything is connected, two features that are important to business users are still missing. First, users who detect incorrect data cannot correct it themselves. To fix the problem, they must start a complex and time-consuming procedure. This causes users to copy and paste the data from the report into a spreadsheet and correct it there. They then end up working with an ungoverned and unmanageable spreadsheet.

A second missing feature is that users cannot enter data themselves. They can only work with data provided by the data warehouse environment. For example, data managed by applications that cannot export it, or data that is only available in spreadsheets, will never show up in the data warehouse. As a result, the business must rely on an incomplete data set to make decisions.


Cohelion converts raw data to consumable business data

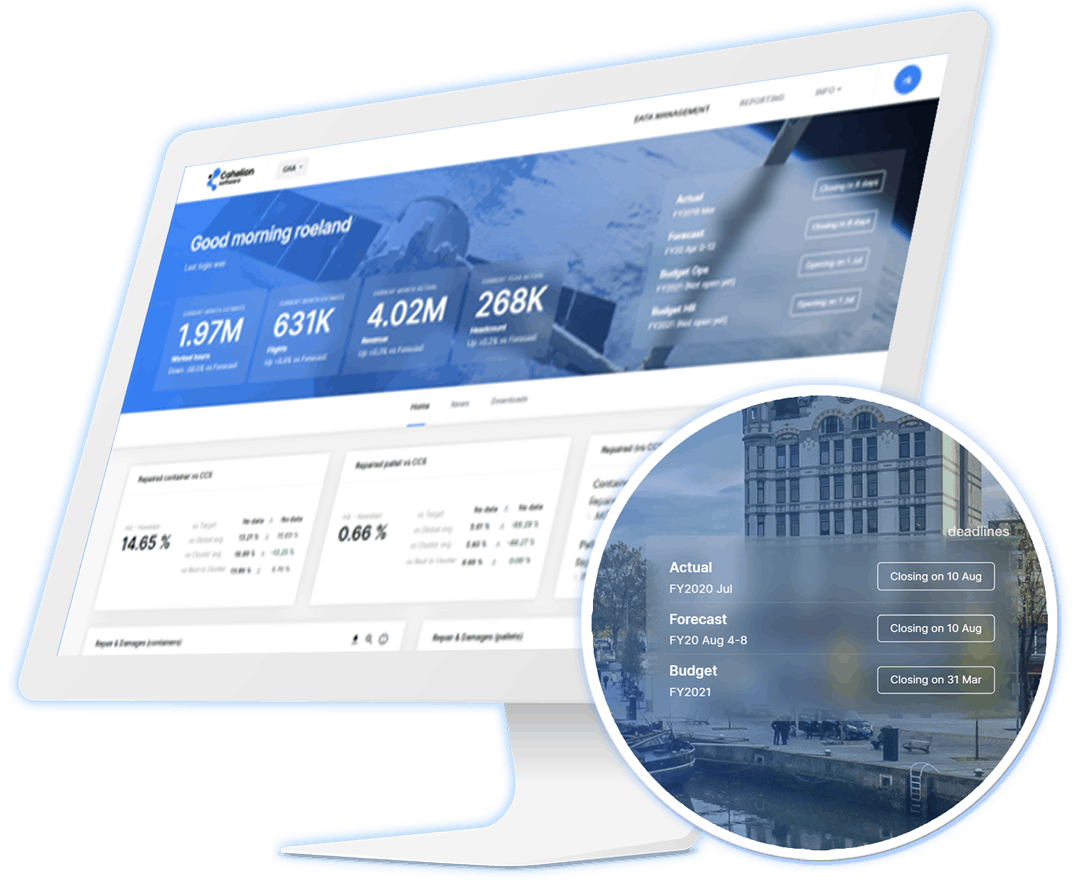

The Cohelion product works differently. Cohelion is like an all-in-one data warehouse factory. Just as an ordinary factory processes raw materials and assembles them into finished products, Cohelion converts raw data into consumable business data. To enable this, it supports an integrated set of features for data loading, data integration, master data management, metadata, and data transformations, as well as data correction and data entry by business users.

Follow the flow of data

The easiest way to describe the product is to follow the flow of data: where it enters the product, how it passes through the product, and how it is made available for reporting and analytics.

As with most other data warehouse products, data can be extracted from a wide variety of sources and loaded into Cohelion’s own data storage area. This area has characteristics of a data lake: unlike in a more traditional staging area, data is never removed from it. The area is implemented with a SQL database, and the table structures are identical to the structure of the loaded data. These tables are called raw tables. When data from multiple sources with different data structures is loaded into the raw tables, it is not integrated. For example, customer data coming from a sales system is stored in a different table than the sales data coming from the finance system.
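The raw-table idea can be illustrated with a minimal sketch using Python's built-in `sqlite3` module. This is not Cohelion's implementation: the table names, the columns, and the extra `load_id` column used to tell load batches apart are hypothetical. Each source gets its own table whose columns mirror the source data, and every load only appends rows, so earlier batches remain available.

```python
import sqlite3

# Hypothetical raw tables: one table per source, with columns mirroring that
# source's data plus an assumed load_id column identifying the load batch.
SCHEMAS = {
    "raw_sales_customers": "load_id INTEGER, customer_id TEXT, name TEXT, region TEXT",
    "raw_finance_sales": "load_id INTEGER, invoice_id TEXT, region TEXT, amount REAL",
}


def create_raw_tables(conn: sqlite3.Connection) -> None:
    for table, columns in SCHEMAS.items():
        conn.execute(f"CREATE TABLE IF NOT EXISTS {table} ({columns})")


def append_batch(conn: sqlite3.Connection, table: str, load_id: int, rows: list[tuple]) -> None:
    """Append one load batch; existing rows are never updated or deleted."""
    if not rows:
        return
    placeholders = ", ".join(["?"] * (len(rows[0]) + 1))
    conn.executemany(
        f"INSERT INTO {table} VALUES ({placeholders})",
        [(load_id, *row) for row in rows],
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
create_raw_tables(conn)
append_batch(conn, "raw_sales_customers", 1, [("C1", "Acme", "North")])
append_batch(conn, "raw_sales_customers", 2, [("C1", "Acme Corp", "North")])
# Both versions of customer C1 are kept, one per load batch.
print(conn.execute("SELECT load_id, name FROM raw_sales_customers ORDER BY load_id").fetchall())
# prints [(1, 'Acme'), (2, 'Acme Corp')]
```

Because nothing is overwritten, every earlier state of the source data stays queryable, and data from the sales and finance systems stays in separate, unintegrated tables.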

Next, the data is copied from the data storage area to fact tables in an area that resembles a data warehouse. Customers do not design these fact tables; they are all created automatically by the product.

If data loaded into the fact tables is known to be incorrect or incomplete, business users can correct or complete it via a web application, without having to wait for their IT department to adjust the ELT process. The system contains a full audit trail that registers when those updates were made and by whom. Cohelion keeps track of both the original and the corrected data.
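Keeping both the original and the corrected data, together with an audit trail, can be sketched in a few lines of Python. The sketch is hypothetical and does not reflect how Cohelion stores corrections: the `FactTable` class, its methods, the row keys, and the user name are illustrative assumptions. Loaded values are never overwritten; corrections are stored separately, and every change is logged with the user and a timestamp.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditEntry:
    row_key: str
    column: str
    old_value: object
    new_value: object
    user: str
    changed_at: datetime


@dataclass
class FactTable:
    """Hypothetical fact table that stores user corrections next to the loaded values."""

    original: dict[str, dict[str, object]]
    corrections: dict[tuple[str, str], object] = field(default_factory=dict)
    audit_trail: list[AuditEntry] = field(default_factory=list)

    def value(self, row_key: str, column: str) -> object:
        """Current value: the latest correction if one exists, else the loaded value."""
        return self.corrections.get((row_key, column), self.original[row_key][column])

    def correct(self, row_key: str, column: str, new_value: object, user: str) -> None:
        """Record a correction; the loaded value in `original` is never overwritten."""
        old_value = self.value(row_key, column)
        self.corrections[(row_key, column)] = new_value
        self.audit_trail.append(
            AuditEntry(row_key, column, old_value, new_value, user, datetime.now(timezone.utc))
        )


facts = FactTable(original={"2020-06/Canada": {"revenue": 1200.0, "units": None}})
facts.correct("2020-06/Canada", "units", 40, user="j.smith")        # complete missing data
facts.correct("2020-06/Canada", "revenue", 1250.0, user="j.smith")  # fix incorrect data
print(facts.value("2020-06/Canada", "revenue"))     # 1250.0 (corrected)
print(facts.original["2020-06/Canada"]["revenue"])  # 1200.0 (original kept)
for entry in facts.audit_trail:
    print(entry.user, entry.column, entry.old_value, "->", entry.new_value)
```

Separating corrections from loaded values means a later reload can refresh `original` without silently discarding what users have fixed.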

In the next step, data is integrated based on the available master data. The master data mappings can be entered in many different ways, ranging from mass loading from other systems to manual insertion. Users can define which data values are incorrect and what their correct values should be, indicate that region X in the sales system is actually region Y in the finance system, and define hierarchies of data elements via a drag-and-drop interface. This embedded master data module is multi-domain.
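The kinds of master data rules described above can be illustrated with plain Python dictionaries. This is a hypothetical sketch, not Cohelion's master data model: the names `CORRECTIONS`, `REGION_MAP`, and `HIERARCHY` and their contents are made up. A source value is first corrected, then mapped to its master value, and hierarchies are walked from child to parent.

```python
# Hypothetical master data for a single domain (regions).

# Incorrect source value -> correct value.
CORRECTIONS = {"Regoin X": "Region X"}

# (source system, local value) -> master value: region X in the sales system
# is region Y in the finance system.
REGION_MAP = {
    ("sales", "Region X"): "Region Y",
    ("finance", "Region Y"): "Region Y",
}

# Hierarchy of data elements: child -> parent.
HIERARCHY = {"Region Y": "Europe", "Europe": "Global"}


def to_master(source: str, value: str) -> str:
    """Correct a source value, then map it to its master value."""
    corrected = CORRECTIONS.get(value, value)
    return REGION_MAP[(source, corrected)]


def ancestors(member: str) -> list[str]:
    """Return the parents of a member, from nearest to top of the hierarchy."""
    path = []
    while member in HIERARCHY:
        member = HIERARCHY[member]
        path.append(member)
    return path


print(to_master("sales", "Regoin X"))  # Region Y
print(ancestors("Region Y"))           # ['Europe', 'Global']
```

In this sketch an unmapped value raises `KeyError`; a real tool would more likely flag it as a gap for a user to resolve.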

The master data is used in the next step of the data factory, when data is copied to analytical cubes. The cubes contain integrated data: integration takes place by joining tables, by using the available master data, or both. These cubes are designed to support reporting and analytics, and can also be used directly by self-service BI tools such as Power BI, Tableau, and Qlik.
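A cube that integrates data through master data can be sketched as an aggregation keyed on master values rather than on each system's local codes. The sketch below is a hypothetical illustration, not Cohelion's cube engine: the mapping, the measure names `ordered` and `invoiced`, and the row layout are assumptions. Because both sources are translated to the same master region first, their figures end up in the same cube cell.

```python
from collections import defaultdict

# Hypothetical master data: both systems' codes map to one master region.
REGION_MAP = {("sales", "Region X"): "Region Y", ("finance", "Region Y"): "Region Y"}

# Hypothetical fact rows: (local region code, month, amount).
sales_rows = [("Region X", "2020-06", 100.0), ("Region X", "2020-07", 80.0)]
finance_rows = [("Region Y", "2020-06", 60.0)]


def build_cube(sales, finance):
    """Aggregate both sources into one cube keyed by (master region, month)."""
    cube = defaultdict(lambda: {"ordered": 0.0, "invoiced": 0.0})
    for region, month, amount in sales:
        cube[(REGION_MAP[("sales", region)], month)]["ordered"] += amount
    for region, month, amount in finance:
        cube[(REGION_MAP[("finance", region)], month)]["invoiced"] += amount
    return dict(cube)


cube = build_cube(sales_rows, finance_rows)
print(cube[("Region Y", "2020-06")])  # {'ordered': 100.0, 'invoiced': 60.0}
```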

Users can also use Cohelion to analyze where data originates. When a user clicks on a specific value in a report or diagram, an analysis of its data lineage is shown. If the value has been changed manually, that fact is shown as well, and users can also see what the original (incorrect) value was.

The entire product is SaaS-based and can run on private or public cloud platforms.

KPI Forecasting workflow

One interesting feature is that, for any KPI that collects actual data, a forecast or budget scenario can be defined, complete with an extensive approval workflow. Users can even go back in time to see how well the forecasts matched the actual numbers.
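Going back in time to compare forecasts with actuals can be sketched by storing every approved forecast version together with its approval date. The sketch is hypothetical: the data, the quarterly periods, and the use of percentage error as the accuracy measure are assumptions, and the approval workflow itself is not modeled.

```python
from datetime import date

# Hypothetical approved forecast versions for one KPI: (approval date, forecast per period).
forecast_versions = [
    (date(2020, 1, 15), {"2020-Q2": 500.0, "2020-Q3": 550.0}),
    (date(2020, 4, 15), {"2020-Q2": 450.0, "2020-Q3": 480.0}),
]
actuals = {"2020-Q2": 430.0, "2020-Q3": 500.0}


def forecast_as_of(as_of: date) -> dict[str, float]:
    """Return the most recent forecast version approved on or before `as_of`."""
    known = [version for version in forecast_versions if version[0] <= as_of]
    if not known:
        raise ValueError(f"no forecast approved on or before {as_of}")
    return max(known, key=lambda version: version[0])[1]


def percentage_errors(as_of: date) -> dict[str, float]:
    """Forecast error per period, in percent of the actual value."""
    forecast = forecast_as_of(as_of)
    return {
        period: round(100 * (forecast[period] - actual) / actual, 1)
        for period, actual in actuals.items()
        if period in forecast
    }


print(percentage_errors(date(2020, 3, 1)))  # {'2020-Q2': 16.3, '2020-Q3': 10.0}
print(percentage_errors(date(2020, 6, 1)))  # {'2020-Q2': 4.7, '2020-Q3': -4.0}
```

Keeping old versions instead of overwriting the forecast is what makes the "as of" question answerable at all.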

Cohelion monitors its own data factory. It includes an Outlook-like calendar that shows when each extraction and copying process will start and indicates which ones have started, succeeded, or failed. This allows users to see, for example, whether the new sales data from Canada has already been loaded and processed.
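A calendar of this kind boils down to a list of scheduled runs with a status per run. The sketch below is hypothetical and not based on Cohelion's internals: the `Status` values, the job names, and the rule that data counts as processed only when every job in its chain has succeeded are assumptions.

```python
from dataclasses import dataclass
from datetime import date, datetime
from enum import Enum


class Status(Enum):
    SCHEDULED = "scheduled"
    RUNNING = "running"
    SUCCEEDED = "succeeded"
    FAILED = "failed"


@dataclass
class Run:
    job: str
    starts_at: datetime
    status: Status


# Hypothetical runs for one day; job names are illustrative only.
runs = [
    Run("copy Canada sales to fact tables", datetime(2020, 7, 22, 3, 0), Status.RUNNING),
    Run("extract Canada sales", datetime(2020, 7, 22, 2, 0), Status.SUCCEEDED),
    Run("build sales cube", datetime(2020, 7, 22, 4, 0), Status.SCHEDULED),
]


def agenda(day: date) -> list[Run]:
    """All runs on `day`, ordered by start time, like a calendar view."""
    return sorted((r for r in runs if r.starts_at.date() == day), key=lambda r: r.starts_at)


def is_processed(jobs: list[str], day: date) -> bool:
    """True only if every listed job has a succeeded run on `day`."""
    return all(
        any(r.job == job and r.status is Status.SUCCEEDED for r in agenda(day))
        for job in jobs
    )


for run in agenda(date(2020, 7, 22)):
    print(run.starts_at.strftime("%H:%M"), run.job, run.status.value)

canada_chain = ["extract Canada sales", "copy Canada sales to fact tables"]
print(is_processed(canada_chain, date(2020, 7, 22)))  # False: the copy is still running
```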


To summarize, Cohelion is a unique product and therefore difficult to compare with other data warehouse tools. It may not be the right product for companies with large existing data warehouse environments. But for organizations that don’t have a data warehouse yet and are struggling to extract and integrate data from all their reporting and analytical systems, it may be the right solution. It could also be of interest to business departments that need to develop a data warehouse quickly. In that case, the existing, IT-managed, central data warehouse is likely to become a source for the Cohelion product.

Note to other vendors: please check out this product. I wouldn’t mind if you implemented some of Cohelion’s features.

About Rick F. van der Lans

Rick is a highly respected independent analyst, consultant, author, and internationally acclaimed lecturer specializing in data warehousing, business intelligence, big data, and database technology.

In 2018, he was selected by Onalytica.com as the sixth most influential BI analyst.

He has presented countless seminars, webinars, and keynotes at industry-leading conferences. Over the years, Rick has written hundreds of articles and blogs for newspapers and websites and has authored many educational and popular white papers for numerous vendors.

He was the author of the first available book on SQL, entitled “Introduction to SQL”, which has been translated into several languages with more than 100,000 copies sold. In 2012, he published his book “Data Virtualization for Business Intelligence Systems”.